Machine Learning Infrastructure

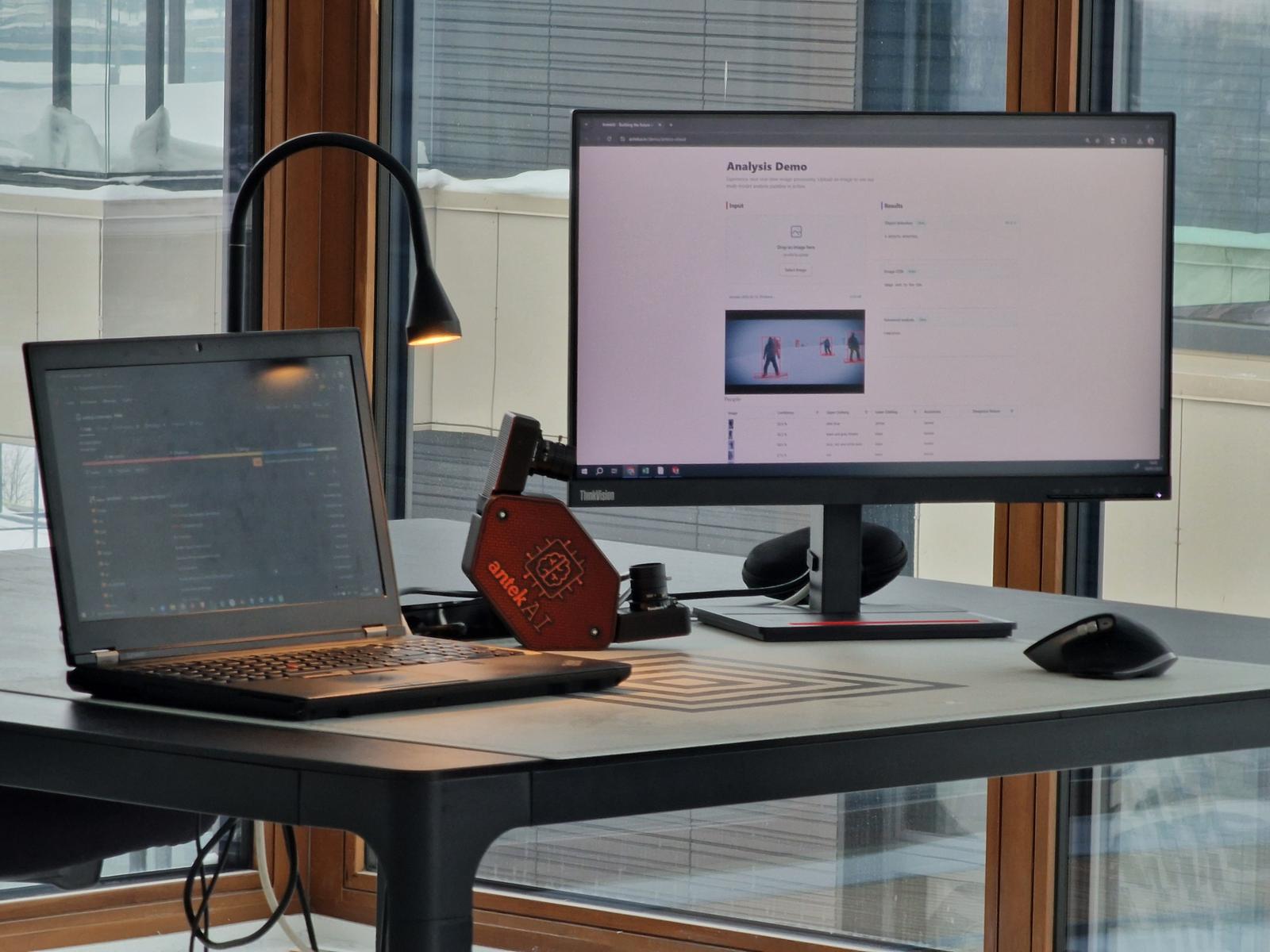

Computer Vision. Cloud to Edge.

Custom training. Any model. Full deployment stack.

Training

Custom models on your data

AI models can be designed for different tasks, such as recognising objects, drawing, processing text and much more. Training is what specialises them. Take an "AI artist" model, for instance: training teaches it to draw exactly the way you need. Renaissance art? Disney animation? Anime?

Cloud

Scalable inference infrastructure

Where should your AI model be hosted? Most likely in the cloud. Why? Because whether you need to process 10 images per day or 10 million, the setup does not change; it just scales. Models can be swapped or improved without touching your hardware.

Embedded

Edge deployment on Hailo, Axelera, Nvidia

Sometimes the AI needs to live inside the device itself: a camera, a sensor, a machine on a factory floor. This is usually required when the system must respond instantly, or when the data has to "stay in the box". No worries: AI models can run on standalone hardware. We deploy them on Hailo, Axelera, and Nvidia accelerators, which are compact, power-efficient hardware built for edge inference.

Fullstack

End-to-end ML pipelines

The AI model is just the brain. You will often also need hardware (computing units, sensors or actuators), integrations with other digital systems (APIs), user interfaces, and the rest of the software that wraps around it. We build the whole body, not just the brain.

Edge deployment on industry-leading hardware

The Team

Who's behind antekAI?

Patrick

Marco

Francesco

Tommaso

We are a team of four Italians (Patrick, Marco, Francesco and Tommaso) scattered across Europe but based in Riga, Latvia. We geek out and experiment with all kinds of technology: we are the kind of people who obsess over creating useful, interesting things. Our capabilities go beyond software, computers and hardware; our interests also span mechanical design, audio, photography, chemistry, 3D and additive manufacturing, and telecoms, to name a few. And through all of it, we share a passion for elemental simplicity.